| Period | Position / Degree |
|---|---|
| 2014 – now | Postdoctoral researcher in computer graphics, Technicolor, Rennes, France. Fields: procedural modeling, interactive generation and rendering, levels of detail, scalability |
| 2011 – 2014 | Ph.D. in computer graphics (graduated in February 2014), Technicolor, Rennes, France, and Manao team, LaBRI/INRIA, Université de Bordeaux I, France. Thesis: Interactive Generation And Rendering Of Massive Models: A Parallel Procedural Approach |
| 2007 – 2010 | Engineering degree in computer science (Master level), IFSIC/ESIR, Université de Rennes I, France. Major in computer graphics and image processing |
| 2005 – 2007 | Two-year course in computer science, Institut Universitaire de Technologie, Nantes, France |

Cyprien Buron

Technicolor Research and Innovation

975, avenue des champs blancs

CS 17616

35576 Cesson-Sévigné

France

E-mail: cyprien.buron 'at-sign' technicolor.com

Tel: +33 (0)2 99 27 32 28


Publications

Dynamic On-Mesh Procedural Generation Control. Buron Cyprien, Marvie Jean-Eudes, Guennebaud Gaël, Granier Xavier. SIGGRAPH Talk 2014.

[ Abstract ] Procedural representations are powerful tools for generating highly detailed objects through amplification rules. However, controlling such rules within environment contexts (e.g., growth on shapes) is restricted to CPU-based methods, which limits performance. To interactively control shape grammars, we introduce a novel approach based on a marching rule on the GPU. Environment contexts are encoded as geometry texture atlases, in which indirection pixels are computed around each chart border. At run time, the new rule marches through the texture atlas and efficiently jumps from chart to chart using the indirection information. The underlying surface is thus followed during grammar development. Moreover, additional texture information can easily be used to constrain grammar interpretation. For instance, one can paint authorized growth areas or leaf density directly on the mesh and watch the procedural model adapt on the fly to this new environment. Finally, to preserve smooth geometry deformation at the shape instantiation stage, we use cubic Bézier curves computed through a depth-first grammar traversal.
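The cubic Bézier curves mentioned at the end of this abstract can be illustrated generically. The sketch below only evaluates a cubic Bézier curve in Bernstein form and samples it, for example to place the rings of a bent branch; how the talk derives control points from the depth-first traversal is not shown, and `sample_branch_axis` is a hypothetical helper, not part of the original work.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def cubic_bezier(p0: Vec3, p1: Vec3, p2: Vec3, p3: Vec3, t: float) -> Vec3:
    """Evaluate a cubic Bezier curve at t in [0, 1] using the Bernstein basis."""
    u = 1.0 - t
    b0, b1, b2, b3 = u ** 3, 3 * u * u * t, 3 * u * t * t, t ** 3
    return tuple(b0 * a + b1 * b + b2 * c + b3 * d
                 for a, b, c, d in zip(p0, p1, p2, p3))


def sample_branch_axis(p0: Vec3, p1: Vec3, p2: Vec3, p3: Vec3,
                       n: int) -> List[Vec3]:
    """Hypothetical helper: n >= 2 points evenly spaced in t along the curve,
    e.g. positions of the cross-section rings of one bent branch segment."""
    return [cubic_bezier(p0, p1, p2, p3, i / (n - 1)) for i in range(n)]
```

The curve passes through `p0` and `p3` and is tangent to `p1 - p0` and `p3 - p2` at its ends, which is what allows consecutive segments to be chained without visible kinks when their shared tangents are aligned.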

Ph.D. Thesis. Interactive Generation And Rendering Of Massive Models: A Parallel Procedural Approach. Buron Cyprien. February 2014.

[ Abstract ] With the increasing computing and storage capabilities of recent hardware, the movie and video game industries demand ever larger realistic environments. However, modeling such scenery by hand is highly time-consuming and costly. Procedural modeling, on the other hand, provides methods to easily generate a wide diversity of elements such as vegetation and architecture. While grammar rules offer a powerful high-level modeling tool, using them is often tedious and requires a frustrating process of trial and error. Moreover, since no existing solution offers real-time generation and rendering of massive environments, artists have to work on separate parts before integrating the whole to see the results.

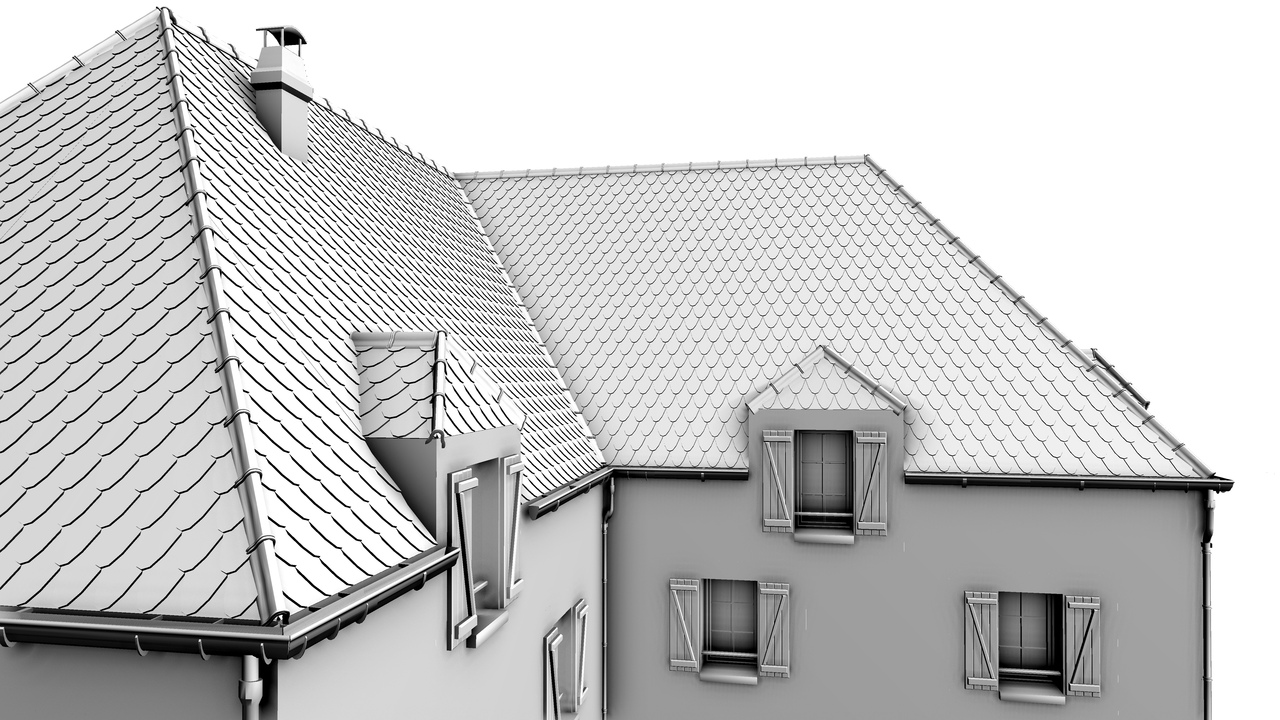

In this research, we aim to provide interactive generation and rendering of very large scenes, while offering artist-friendly methods for controlling grammar behavior. We first introduce a GPU-based pipeline providing parallel procedural generation at render time. To this end, we propose a segment-based expansion method working on independent elements, thus allowing parallel amplification. We then extend this pipeline to support models that rely on internal contexts, such as roofs. We also present external contexts to control grammars with surface and texture data. Finally, we integrate a level-of-detail (LOD) system and optimization techniques into our pipeline, providing interactive generation, editing and visualization of massive environments. We demonstrate the efficiency of our pipeline on a scene comprising a hundred thousand trees and a hundred thousand buildings, which would represent 2 TB of data if generated offline.
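The key property of segment-based expansion is that each input element is amplified without reading any other element. A minimal sketch of that idea, assuming a single hypothetical rule that tiles a footprint edge into equal facade tiles (`Segment`, `expand_segment` and `tile_width` are illustrative names, not the thesis API). Python threads merely stand in for the GPU threads of the actual pipeline.

```python
import math
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from typing import List, Tuple

# (terminal symbol, start parameter, end parameter) along the input segment
Terminal = Tuple[str, float, float]


@dataclass(frozen=True)
class Segment:
    """A 2D input segment, e.g. one edge of a building footprint."""
    ax: float
    ay: float
    bx: float
    by: float


def expand_segment(seg: Segment, tile_width: float = 2.0) -> List[Terminal]:
    """Hypothetical rule 'Facade -> repeat(Tile, tile_width)'.

    Reads only its own segment, so every segment can be expanded
    independently of all the others."""
    length = math.hypot(seg.bx - seg.ax, seg.by - seg.ay)
    n = max(1, int(length // tile_width))  # at least one tile per segment
    return [("Tile", i / n, (i + 1) / n) for i in range(n)]


def expand_all(segments: List[Segment]) -> List[List[Terminal]]:
    """Map the rule over all segments; tasks share no state."""
    with ThreadPoolExecutor() as pool:
        return list(pool.map(expand_segment, segments))
```

Because `expand_segment` is a pure function of one segment, the same mapping could be issued as one GPU thread per segment with no synchronization between them.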

GPU Roof Grammars. Buron Cyprien, Marvie Jean-Eudes, Gautron Pascal. In Proceedings of Eurographics 2013. May 2013. Girona, Spain. Buildings and Stereo Short Papers Session.

[ Abstract ] We extend GPU shape grammars [MBG12] to model highly detailed roofs. Starting from a consistent roof structure, such as a straight skeleton computed from the building footprint, we decompose this information into local roof parameters per input segment, compliant with GPU shape grammars. We also introduce Join and Project rules for a consistent description of roofs using grammars, bringing the massive parallelism of GPU shape grammars to the coherent generation of global structures.
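Decomposing a global roof structure into per-segment parameters can be shown on the simplest footprint. A minimal sketch, assuming a rectangular footprint and the same pitch on every roof plane, where the straight skeleton has a closed form; the paper handles general skeletons and adds Join and Project rules, none of which is reproduced here. `RoofFace` and `hip_roof_faces` are hypothetical names.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class RoofFace:
    """Local roof parameters attached to one footprint edge."""
    base_length: float  # length of the footprint edge (eave)
    top_length: float   # length of this face's ridge edge (0 for a hip triangle)
    run: float          # horizontal distance from the eave to the ridge
    height: float       # ridge height above the eave


def hip_roof_faces(width: float, depth: float, pitch_deg: float) -> List[RoofFace]:
    """Per-edge parameters for an equal-pitch hip roof on a rectangle.

    The straight skeleton of a rectangle puts the ridge depth/2 away from
    every edge, so the ridge length is width - depth (0 for a square,
    giving a pyramid). Edges are returned in order long, short, long, short."""
    if width < depth:
        width, depth = depth, width
    run = depth / 2.0
    height = run * math.tan(math.radians(pitch_deg))
    ridge = width - depth
    long_face = RoofFace(width, ridge, run, height)
    short_face = RoofFace(depth, 0.0, run, height)
    return [long_face, short_face, long_face, short_face]
```

Each returned record depends only on its own edge and a few shared scalars, which is the kind of locality that lets every face be generated by an independent grammar instance.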

GPU Shape Grammars. Marvie Jean-Eudes, Buron Cyprien, Gautron Pascal, Hirtzlin Patrice, Sourimant Gaël. In Proceedings of Pacific Graphics 2012. September 2012. Hong Kong.

[ Abstract ] GPU Shape Grammars provide a solution for interactive procedural generation, tuning and visualization of massive environment elements for both video games and production rendering. Our technique generates detailed models without explicit geometry storage. To this end, we reformulate grammar expansion to generate detailed models at the tessellation control and geometry shader stages. Using the geometry generation capabilities of modern graphics hardware, our technique generates massive, highly detailed models. GPU Shape Grammars integrate into a scalable framework by introducing automatic generation of levels of detail at reduced cost. We apply our solution to the interactive generation and rendering of scenes containing thousands of buildings and trees.

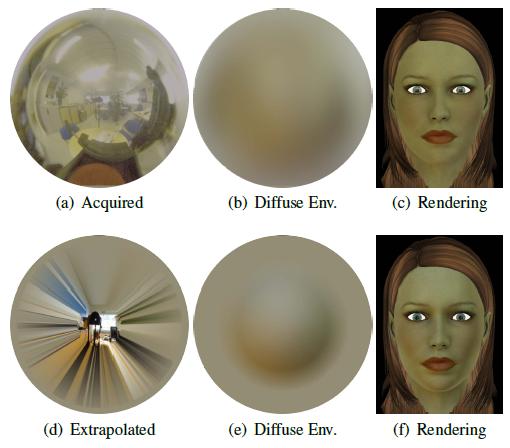
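The abstract does not say how a level of detail is chosen. As an assumption only, a common heuristic is to estimate an object's projected size in pixels and stop grammar expansion once further detail would fall below a pixel threshold. The sketch below assumes each grammar level halves feature size; `expansion_depth` and `min_detail_px` are hypothetical names.

```python
import math


def projected_size_px(radius: float, distance: float,
                      fov_y_deg: float, screen_height_px: int) -> float:
    """Approximate on-screen size, in pixels, of a bounding sphere's radius
    under a perspective projection with vertical field of view fov_y_deg."""
    if distance <= 0.0:
        raise ValueError("object must be in front of the camera")
    half_fov = math.radians(fov_y_deg) / 2.0
    return radius / (distance * math.tan(half_fov)) * (screen_height_px / 2.0)


def expansion_depth(size_px: float, min_detail_px: float, max_depth: int) -> int:
    """Hypothetical rule: each grammar level halves the feature size, so stop
    expanding once features would shrink below min_detail_px."""
    if size_px <= min_detail_px:
        return 0
    depth = int(math.floor(math.log2(size_px / min_detail_px)))
    return max(0, min(max_depth, depth))
```

For instance, an object of radius 1 seen at distance 10 with a 90° vertical field of view on a 1000-pixel-high screen covers about 50 pixels, which with a 4-pixel threshold allows 3 levels of expansion.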

Grabbing Real Light - Toward Virtual Presence. Gautron Pascal, Marvie Jean-Eudes, Buron Cyprien. In Proceedings of the 27th Spring Conference on Computer Graphics. April 2011. Viničné, Slovak Republic.

[ Abstract ] Realistic lighting of virtual scenes has been widely researched for decades. Many recent methods for realistic rendering make extensive use of image-based lighting, in which a real lighting environment is captured and reused to light virtual objects. However, capturing such lighting environments usually requires still scenes and specific capture hardware such as high-end digital cameras and mirror balls. In this paper we introduce a simple solution for capturing plausible environment maps using one of the most widely used capture devices: a simple webcam. To be applied efficiently, the captured environment data is then filtered on graphics hardware according to the reflectance functions of the virtual surfaces. As the limited aperture of the webcam does not allow capturing the entire environment, we propose a simple method for extrapolating the incoming lighting outside the webcam range, yielding a full estimate of the incoming lighting for any direction in space. Our solution can be applied to direct lighting of virtual objects in any virtual reality application, such as virtual worlds and games. Also, using either environment map combination or semi-automatic light source extraction, our method can be used as an integrated lighting design tool for post-production.
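To illustrate only the filtering step (the paper's extrapolation method is not reproduced), here is a brute-force sketch assuming a Phong lobe as the surface reflectance function and a captured map given as a list of direction/radiance samples. A GPU version would evaluate the same weighted sum per output texel. `filter_phong` and the sample layout are hypothetical.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def normalize(v: Vec3) -> Vec3:
    n = math.sqrt(sum(c * c for c in v))
    return (v[0] / n, v[1] / n, v[2] / n)


def filter_phong(samples: List[Tuple[Vec3, Vec3]], r: Vec3,
                 shininess: float) -> Vec3:
    """Glossy prefiltered radiance seen along reflection direction r.

    samples: (direction, RGB radiance) pairs from the captured map.
    Each sample is weighted by max(0, dot(r, l)) ** shininess (a Phong lobe,
    shininess > 0), and the result is normalized by the total weight."""
    r = normalize(r)
    acc = [0.0, 0.0, 0.0]
    total = 0.0
    for direction, radiance in samples:
        l = normalize(direction)
        w = max(0.0, sum(a * b for a, b in zip(r, l))) ** shininess
        total += w
        for k in range(3):
            acc[k] += w * radiance[k]
    if total == 0.0:
        return (0.0, 0.0, 0.0)  # no captured light inside the lobe
    return (acc[0] / total, acc[1] / total, acc[2] / total)
```

Higher `shininess` narrows the lobe, so glossy surfaces average fewer directions and reflect a sharper version of the captured environment.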